
One of the most widespread and effective classifiers in statistical learning, logistic regression, can easily be implemented in Alchemy. Here we deal only with binary predictors and a binary dependent variable, but the extension to multi-valued ones is intuitive as well.

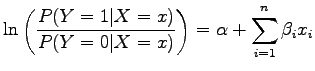

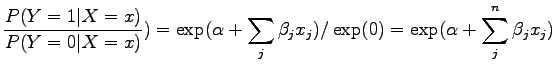

Logistic regression is a regression model of the form:

$$
\log \frac{P(Y=1 \mid X=x)}{P(Y=0 \mid X=x)} = a + \sum_{i=1}^{n} b_i x_i \tag{1}
$$

where ![]() is a vector of binary predictors and

is a vector of binary predictors and ![]() is the dependent variable.

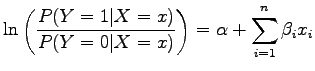

So how do we describe this model in Markov logic? If we look at the model of

Markov networks:

is the dependent variable.
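Here is a minimal Python sketch of equation (1). The log-odds are a linear function of the binary predictors, and inverting them with the logistic function gives $P(Y=1 \mid X=x)$. The function names and parameter values below are hypothetical and serve only as an illustration.

```python
import math

def log_odds(a, b, x):
    """Log-odds a + sum_i b_i * x_i, as in equation (1)."""
    return a + sum(b_i * x_i for b_i, x_i in zip(b, x))

def prob_y1(a, b, x):
    """P(Y=1 | X=x): invert the log-odds with the logistic function."""
    return 1.0 / (1.0 + math.exp(-log_odds(a, b, x)))

# Hypothetical parameters for three binary predictors.
a = -1.0
b = [2.0, -0.5, 1.5]
x = [1, 0, 1]
print(log_odds(a, b, x))  # 2.5
print(prob_y1(a, b, x))   # above 0.5, so Y=1 is the more likely class
```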

So how do we describe this model in Markov logic? If we look at the model of Markov networks:

$$
P(X=x) = \frac{1}{Z} \exp\Big(\sum_j w_j f_j(x)\Big) \tag{2}
$$

this implies we need one feature for ![]() and one feature for each

and one feature for each ![]() ,

,![]() in

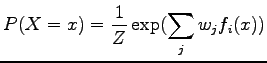

order to arrive at the model

in

order to arrive at the model

$$
P(Y=y \mid X=x) = \frac{1}{Z_x} \exp\Big(a\,y + \sum_{i=1}^{n} b_i\, x_i\, y\Big) \tag{3}
$$

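resulting in

$$
\log \frac{P(Y=1 \mid X=x)}{P(Y=0 \mid X=x)} = \log \frac{\exp\big(a + \sum_{i=1}^{n} b_i x_i\big)}{\exp(0)} = a + \sum_{i=1}^{n} b_i x_i \tag{4}
$$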
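The step from the Markov network (2) to the log-odds (4) can be checked numerically. The Python sketch below uses its own function names and hypothetical weights. It scores both values of $Y$ using the formula $Y$ with weight $a$ and each $X_i \wedge Y$ with weight $b_i$. It then normalizes over $y \in \{0, 1\}$ and compares the result with the logistic function for every binary input vector.

```python
import math
from itertools import product

def mn_conditional(a, b, x):
    """P(Y=1 | X=x) computed directly from the Markov network.

    Features: f_0 = Y with weight a, and f_i = X_i AND Y with weight b_i.
    Once x is fixed, only y = 0 and y = 1 remain, so Z_x has two terms.
    """
    def score(y):
        # sum_j w_j f_j(x, y) for the features listed above
        return a * y + sum(b_i * (x_i * y) for b_i, x_i in zip(b, x))

    unnormalized = {y: math.exp(score(y)) for y in (0, 1)}
    z_x = unnormalized[0] + unnormalized[1]
    return unnormalized[1] / z_x

def logistic(a, b, x):
    """P(Y=1 | X=x) from the logistic-regression form of equation (1)."""
    s = a + sum(b_i * x_i for b_i, x_i in zip(b, x))
    return 1.0 / (1.0 + math.exp(-s))

# Compare the two on every binary input vector (hypothetical weights).
a, b = 0.3, [1.2, -0.7, 2.0]
for x in product((0, 1), repeat=len(b)):
    assert abs(mn_conditional(a, b, x) - logistic(a, b, x)) < 1e-12
```

The check passes because $y = 0$ always scores $\exp(0) = 1$. That makes the ratio of the two unnormalized scores exactly $\exp(a + \sum_i b_i x_i)$.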

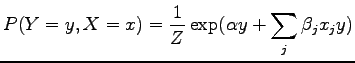

To represent this model as an MLN, each parameter becomes the weight of its corresponding formula: the formula $Y$ has weight $a$, and for each $i$, the formula $X_i \wedge Y$ has weight $b_i$.

We demonstrate this on an example from the UCI Machine Learning Repository, the voting-records dataset, which contains yes/no votes of Congressmen on 16 issues. The class to be determined is "Republican" or "Democrat" (our $Y$), and each vote is a binary predictor (our $X_i$). So the resulting clauses in the MLN are:

Democrat(x)

HandicappedInfants(x) ^ Democrat(x)

WaterProjectCostSharing(x) ^ Democrat(x)

AdoptionOfTheBudgetResolution(x) ^ Democrat(x)

...
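The clause list follows a fixed pattern, so it can be generated mechanically. The hypothetical Python helper below prints only the formula lines, using the three vote predicates named above, and leaves the remaining predicates elided. A complete .mln file would also need its predicate declarations, which this section does not show.

```python
def lr_clauses(target, predictors, var="x"):
    """Return the unweighted MLN formulas for logistic regression:
    one unit clause for the target, plus one conjunction per predictor."""
    clauses = [f"{target}({var})"]
    clauses += [f"{p}({var}) ^ {target}({var})" for p in predictors]
    return clauses

predictors = [
    "HandicappedInfants",
    "WaterProjectCostSharing",
    "AdoptionOfTheBudgetResolution",
    # ... the remaining vote predicates would be listed here
]
for clause in lr_clauses("Democrat", predictors):
    print(clause)
```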

This MLN predicts whether a Congressman is a Democrat (if Democrat(x) is false, x is a Republican). Alternatively, we could have modeled the connection between each predictor and the dependent variable as an implication, for example:

HandicappedInfants(x) => Democrat(x)

These two models are equivalent when we condition on the predictors.
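The equivalence takes one line to verify. Assume both models keep the unit clause Democrat(x) with weight $a$ and give the $i$-th implication weight $b_i$. The implication $X_i \Rightarrow Y$ is true unless $x_i = 1$ and $y = 0$, so its indicator is $1 - x_i + x_i y$. When we normalize over $y$, the part that does not depend on $y$ cancels:

$$
\begin{aligned}
\mathbb{1}[X_i \Rightarrow Y] &= \mathbb{1}[\neg X_i \vee Y] = 1 - x_i + x_i\,y, \\
P(Y=y \mid X=x) &= \frac{\exp\big(a\,y + \sum_i b_i (1 - x_i) + \sum_i b_i\, x_i\, y\big)}{\sum_{y' \in \{0,1\}} \exp\big(a\,y' + \sum_i b_i (1 - x_i) + \sum_i b_i\, x_i\, y'\big)}
= \frac{\exp\big(a\,y + \sum_i b_i\, x_i\, y\big)}{\sum_{y' \in \{0,1\}} \exp\big(a\,y' + \sum_i b_i\, x_i\, y'\big)}.
\end{aligned}
$$

This is exactly the conditional distribution obtained from the conjunctions $X_i \wedge Y$.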

We can perform generative or discriminative weight learning on the MLN, given the training data in voting-train.db, with one of the following commands:

learnwts -g -i voting.mln -o voting-gen.mln -t voting-train.db -ne Democrat

or

learnwts -d -i voting.mln -o voting-disc.mln -t voting-train.db -ne Democrat
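For this MLN, discriminative learning maximizes the conditional log-likelihood of Democrat given the votes, which is the same objective used to fit logistic regression. The Python sketch below uses plain batch gradient ascent with no regularization, which is not necessarily the optimizer that learnwts uses. It runs on a small, made-up toy dataset, not the UCI records, and the function names are hypothetical. For each formula, the gradient is the observed minus the expected number of true groundings.

```python
import math

def predict(a, b, x):
    """P(Y=1 | x) under the logistic-regression / MLN model."""
    s = a + sum(b_i * x_i for b_i, x_i in zip(b, x))
    return 1.0 / (1.0 + math.exp(-s))

def fit_discriminative(data, n_features, lr=0.5, epochs=500):
    """Maximize sum log P(y | x) by batch gradient ascent.

    For formula Y the gradient is y - P(Y=1|x); for formula X_i ^ Y it is
    x_i * (y - P(Y=1|x)), i.e. observed minus expected true groundings.
    """
    a, b = 0.0, [0.0] * n_features
    for _ in range(epochs):
        grad_a, grad_b = 0.0, [0.0] * n_features
        for x, y in data:
            err = y - predict(a, b, x)
            grad_a += err
            for i, x_i in enumerate(x):
                grad_b[i] += err * x_i
        a += lr * grad_a / len(data)
        b = [b_i + lr * g / len(data) for b_i, g in zip(b, grad_b)]
    return a, b

# Hypothetical toy records: (votes, is_democrat). Not the UCI data.
toy = [((1, 0), 1), ((1, 1), 1), ((0, 1), 0), ((0, 0), 0), ((1, 0), 1), ((0, 1), 0)]
a, b = fit_discriminative(toy, n_features=2)
print([round(predict(a, b, x), 2) for x, _ in toy])
```

On this toy data the first vote determines the class, so its learned weight should come out large and positive.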

The learned weights indicate how strongly each vote predicts whether a Congressman is a Democrat. Given these weights, we can take the voting records of one or more new Congressmen and predict whether each is a Democrat or a Republican. We run inference with

infer -ms -i voting-disc.mln -r voting.result -e voting-test.db -q Democrat

This produces the file voting.result containing the marginal probabilities of each Congressman being a Democrat.
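To turn the marginals into hard labels, one can threshold them at 0.5. The Python sketch below assumes each line of voting.result has the form `Democrat(<constant>) <probability>`. That format, the constant names and the helper names are assumptions for illustration only, so check them against the actual output file.

```python
def parse_marginals(lines):
    """Parse lines of the assumed form 'Democrat(<constant>) <probability>'
    into a dict mapping each constant to its marginal probability."""
    probs = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        atom, prob = line.rsplit(maxsplit=1)
        name = atom[atom.index("(") + 1 : atom.rindex(")")]
        probs[name] = float(prob)
    return probs

def classify(probs, threshold=0.5):
    """Label each constant Democrat if P(Democrat) >= threshold, else Republican."""
    return {c: "Democrat" if p >= threshold else "Republican" for c, p in probs.items()}

# Hypothetical file contents; real code would read open("voting.result").
sample = ["Democrat(Rep1) 0.97", "Democrat(Rep2) 0.12", ""]
print(classify(parse_marginals(sample)))  # {'Rep1': 'Democrat', 'Rep2': 'Republican'}
```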