|

Statistical parsing is the task of computing the most probable parse of a sentence given a probabilistic (or weighted) context-free grammar (CFG). The weights of the probabilistic or weighted CFG are typically learned on a corpus of texts. Here, we demonstrate how to translate a given CFG to an MLN and learn weights for the grammar on a small corpus of simple sentences.

A context-free grammar in Chomsky normal form consists of rules of the form:

|

where ![]() ,

, ![]() , and

, and ![]() are non-terminal symbols and

are non-terminal symbols and ![]() is a terminal symbol.

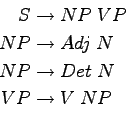

Here, we want to construct a grammar for parsing simple English language

sentences. One such simple grammar might look like this:

is a terminal symbol.

Here, we want to construct a grammar for parsing simple English language

sentences. One such simple grammar might look like this:

|

Here, ![]() stands for sentence,

stands for sentence, ![]() for noun phrase,

for noun phrase, ![]() for verb phrase,

for verb phrase, ![]() for adjective,

for adjective, ![]() for noun,

for noun, ![]() for determiner, and

for determiner, and ![]() for verb. To

translate this into an MLN, we first encode each production rule in the grammar

as a clause:

for verb. To

translate this into an MLN, we first encode each production rule in the grammar

as a clause:

NP(i,j) ^ VP(j,k) => S(i,k) Adj(i,j) ^ N(j,k) => NP(i,k) Det(i,j) ^ N(j,k) => NP(i,k) V(i,j) ^ NP(j,k) => VP(i,k)

Here, i and j indicate indices between the words, including at the

beginning and at the end of the sentence. So, a sentence with ![]() words has

words has

![]() indices. For example, the words in the sentence ``The dog chases the

cat'' would be indexed as

indices. For example, the words in the sentence ``The dog chases the

cat'' would be indexed as ![]() The

The ![]() dog

dog ![]() chases

chases ![]() the

the ![]() cat

cat ![]() .

.

In addition, we need a lexicon for our text telling us the parts of speech of each word. For our small corpus, it looks like this:

// Determiners

Token("a",i) => Det(i,i+1)

Token("the",i) => Det(i,i+1)

// Adjectives

Token("big",i) => Adj(i,i+1)

Token("small",i) => Adj(i,i+1)

// Nouns

Token("dogs",i) => N(i,i+1)

Token("dog",i) => N(i,i+1)

Token("cats",i) => N(i,i+1)

Token("cat",i) => N(i,i+1)

Token("fly",i) => N(i,i+1)

Token("flies",i) => N(i,i+1)

// Verbs

Token("chase",i) => V(i,i+1)

Token("chases",i) => V(i,i+1)

Token("eat",i) => V(i,i+1)

Token("eats",i) => V(i,i+1)

Token("fly",i) => V(i,i+1)

Token("flies",i) => V(i,i+1)

If we left it at this, then two problems would occur. First, if there are two

or more production rules with the same left side (such as

![]() and

and

![]() ), then we need to enforce

the constraint that only one of them fires. This is achieved with a rule of the

form:

), then we need to enforce

the constraint that only one of them fires. This is achieved with a rule of the

form:

NP(i,k) ^ Det(i,j) => !Adj(i,j)

Basically, this is saying ``If a noun phrase results in a determiner and a noun, it cannot result in and adjective and a noun''. The second problem involves ambiguities in the lexicon. If we have homonyms belonging to different parts of speech, such as Fly (noun or verb), then we have to make sure that only one of these parts of speech is assigned. We can enforce this constraint in a general manner by making mutual exlcusion rules for each part of speech pair, i.e.:

!Det(i,j) v !Adj(i,j) !Det(i,j) v !N(i,j) !Det(i,j) v !V(i,j) !Adj(i,j) v !N(i,j) !Adj(i,j) v !V(i,j) !N(i,j) v !V(i,j)

We now have a complete MLN (consisting of the grammar and the lexicon) for which we can learn weights based on training data. We treat each sentence as a separate database, thus the call is

learnwts -d -i cfg.mln -o cfg-out.mln -t s1-train.db,s2-train.db,..., s12-train.db -ne NP,VP,N,V,Det,Adj -multipleDatabases

This produces the weighted CFG in cfg-out.mln with which we can parse our test sentences:

infer -m -i cfg-out.mln -r parse.result -e test/s1-test.db -q NP,VP,N,V,Det,Adj

The extension to weighted definite clause grammars is intuitive because Markov

logic can capture relations between parts of speech by simply adding an

argument to the predicate. For example, if we want to enforce noun-verb

agreement, we would add the number to the noun phrase and verb phrase

predicates, i.e. NP(pos, pos, num) and VP(pos, pos, num) and our

top-level rule would become NP(i,j,n) ![]() VP(j,k,n) => S(i,k).

VP(j,k,n) => S(i,k).